T5 (Text-To-Text Transfer Transformer) was developed by Google Research. Its distinguishing design choice is to cast every NLP task in a text-to-text format, with both inputs and outputs represented as text strings. This adaptability makes T5 a strong candidate for a wide range of NLP tasks, including text summarization.

When T5 is used for sequential text summarization, its encoder first reads the source text, and its decoder then generates the summary one token at a time, selecting and ordering terms to form a coherent result. Much of T5's effectiveness on this task comes from fine-tuning, in which the pre-trained model is trained further on a diverse set of summarization examples. As a result, the model learns to account for context, coherence, and relevance while summarizing sequentially.

The core idea of T5 is to frame all NLP tasks as text-to-text problems. In other words, both the model's input and output are treated as text, allowing for a unified and flexible approach to tasks such as text classification, translation, summarization, question answering, and more. This simplifies the model's design: a single model can handle many tasks because each input is conditioned on a task-specific prefix or label.
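The prefix idea can be illustrated with a minimal sketch. The helper name `to_text_to_text` and the `TASK_PREFIXES` table are hypothetical and exist only for this example; the two prefix strings follow the convention commonly used with T5 checkpoints, and no model is loaded here.

```python
# Minimal sketch: build text-to-text inputs by prepending a task prefix.
# TASK_PREFIXES and to_text_to_text are illustrative names, not a library API.
TASK_PREFIXES = {
    "summarization": "summarize: ",
    "translation_en_de": "translate English to German: ",
}


def to_text_to_text(task: str, text: str) -> str:
    """Return the model input string for the given task and raw text."""
    if task not in TASK_PREFIXES:
        raise ValueError(f"unknown task: {task}")
    # Collapse runs of whitespace so the input is a single clean line.
    cleaned = " ".join(text.split())
    return TASK_PREFIXES[task] + cleaned


if __name__ == "__main__":
    article = "T5 treats   every task as text in, text out."
    print(to_text_to_text("summarization", article))
    print(to_text_to_text("translation_en_de", article))
```

Because the task is encoded in the input string itself, the same model and the same training objective can serve every task; only the prefix changes.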

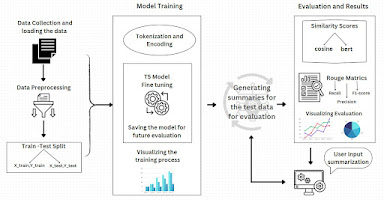

Figure 1: Architecture of T5 model

Use cases and Applications:

There are numerous uses for T5 integration in content-based text summarization:

News and media: T5 can quickly condense news stories into summaries that follow a logical order, giving readers the key highlights.

Academic research: Research papers and lengthy documents can be condensed in a way that preserves the flow of ideas and conclusions while remaining reader-friendly.

Legal and business documents: T5-powered sequential summarization makes it easier to review contracts, case files, and other legal and business documents.

Websites and apps: Readers can be given abridged versions of articles to help them decide whether to read the full piece.

Results:

Cosine Similarity:

The cosine similarity metric compares two vectors in a multidimensional space. It computes the cosine of the angle between them, giving a normalized measure that ranges from -1 (vectors pointing in opposite directions) through 0 (orthogonal, no similarity) to 1 (vectors pointing in the same direction). In natural language processing, cosine similarity is frequently used to determine how similar two text embeddings are, for example the embeddings of two document summaries.
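As a minimal sketch of the computation described above, the cosine similarity is the dot product of the two vectors divided by the product of their lengths. The function name and the toy vectors are assumptions made for illustration; in practice the inputs would be embeddings of the summaries being compared.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length numeric vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        # The angle is undefined for a zero vector.
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    # Toy vectors standing in for two summary embeddings.
    print(cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))  # same direction
    print(cosine_similarity([1.0, 0.0], [0.0, 1.0]))            # orthogonal
    print(cosine_similarity([1.0, 0.0], [-1.0, 0.0]))           # opposite
```

Because the result depends only on direction, not magnitude, a long and a short text with similar content can still score close to 1.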

Figure 2: Graph Representing Cosine Similarity Scores

BERT similarity:

We also compute BERT-based similarity scores, using the Sentence Transformer model ('all-mpnet-base-v2') and the bert_score package. BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained language model that produces contextual representations of text, which lets it capture meaning beyond exact word matches. We use BERT-based similarity to improve how we evaluate summaries: it helps us judge whether the generated summaries preserve the details and context of the text, even where the wording differs.

Figure 3: Graph Representing BERT Similarity Scores

Evaluation Metrics:

Measuring the quality of automatically generated summaries is essential for improving summarization systems. In this investigation we used the ROUGE (Recall-Oriented Understudy for Gisting Evaluation) metric to assess the summaries we generated. ROUGE measures the degree of overlap between a generated summary and a reference summary. Using the Rouge library, we computed the scores for each summary pair individually and then averaged them over the entire dataset. Reporting precision, recall, and F1-score together gives a fuller picture of the summarization system's overall effectiveness.
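The per-pair scoring and dataset-level averaging can be sketched as follows. This is a simplified unigram (ROUGE-1 style) overlap written from scratch for illustration, with whitespace tokenization assumed; it is not the Rouge library used in this work, and the function names and sample sentences are hypothetical.

```python
from collections import Counter


def rouge1(candidate: str, reference: str) -> dict:
    """Unigram-overlap precision, recall and F1 for one summary pair."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Clipped overlap: each word counts at most as often as it appears in both.
    overlap = sum((cand & ref).values())
    precision = overlap / sum(cand.values()) if cand else 0.0
    recall = overlap / sum(ref.values()) if ref else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def average_scores(pairs) -> dict:
    """Average the per-pair scores over a list of (candidate, reference) pairs."""
    if not pairs:
        raise ValueError("need at least one summary pair")
    scores = [rouge1(c, r) for c, r in pairs]
    return {
        key: sum(s[key] for s in scores) / len(scores)
        for key in ("precision", "recall", "f1")
    }


if __name__ == "__main__":
    pairs = [
        ("the cat sat", "the cat sat on the mat"),
        ("a dog barked loudly", "the dog barked"),
    ]
    print(average_scores(pairs))
```

Precision penalizes summaries that add words absent from the reference, recall penalizes summaries that omit reference words, and F1 balances the two.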

Figure 4: Graphs Representing Recall, Precision and F1-score

Conclusion:

Content-based text summarization using a sequential approach is distinguished by its ability to condense important information while preserving the text's original order and coherence. Unlike systems that treat text as an unordered collection of words, this method emphasizes contextual flow and the links between sentences. By examining the content sequentially, it captures chronological and structural arrangement, which makes it particularly effective in applications where the order of information is crucial. The method's effectiveness depends on the model's capacity to recognize contextual nuances, which is typically achieved with natural language processing techniques such as recurrent neural networks or transformers. The technique's versatility is shown by its use across a wide range of text genres, from news items to academic papers, where summaries need to retain narrative structure. Ambiguity and subjectivity remain challenges, however, prompting ongoing work to improve contextual awareness and incorporate domain-specific knowledge. Pre-trained language models such as T5 help improve both summarization accuracy and contextual awareness.
